BY KEVIN WEINER

New OHBM Communications Committee article on HuffPost Science: "As a scientific conference approaches, I always think back to my first science fair: I stood next to my homemade presentation of graphs and tables glued to a poster board positioned next to hundreds of others made by fellow elementary school students. An overweight Paisan from rural New Jersey, I was more looking forward to my post-presentation reward of cannoli from my favorite bakery in South Philly than I was to standing for hours on end answering questions from the judges. Conferences these days are pretty similar to the science fairs from decades ago, just on a grander scale with better technology. For example, every year the Organization for Human Brain Mapping (OHBM) brings together thousands of scientists who work with brain imaging data from around the world to share hot off the press findings that they just published or are preparing to publish. This year, 4,391 presentations will be on hand in Geneva for our annual conference." Read more

BY LISA NICKERSON

The old adage “there’s something for everyone” is an understatement when it comes to the representation of imaging data analysis techniques at the OHBM Annual Meeting. From courses and workshops on the fundamentals of analysis to oral sessions and symposia highlighting work at the forefront of analytical methods development, the annual OHBM meeting is unparalleled in this regard. When I was a young graduate student, and later a post-doc, OHBM drew me in as one of the best resources for learning about imaging data analysis. Throughout the year, I would spend countless hours, days, and even months combing through the literature and the internet trying to determine what information was reliable or most relevant for my work, scouring the SPM and FSL forums for answers to my questions, and generally being frustrated at how long it took to get the answers I needed to make headway on various analysis issues. The OHBM Educational Courses and Morning Workshops offered me an opportunity to learn from experts, meet them, and ask them my questions directly. This is the only conference I know of that places such a strong emphasis on imaging data analysis, and I advise all my trainees and collaborators who are trying to learn analysis to go to OHBM to soak it in.

This year, the opportunities for learning begin before the OHBM meeting itself, with several Satellite Meetings taking place right before the conference, including: FSL Course 2016, Pattern Recognition in Neuroimaging, Brain Connectivity, and the BrainMap/Mango Workshop. In addition, the OHBM Educational Courses take place on the Sunday before the Opening Ceremonies, with several courses that are fantastic for students, post-docs, those who are new to neuroimaging, and those who just want to pick up new analysis techniques.

Excerpt from OHBM Communications/Media Team article on HuffPost Science:

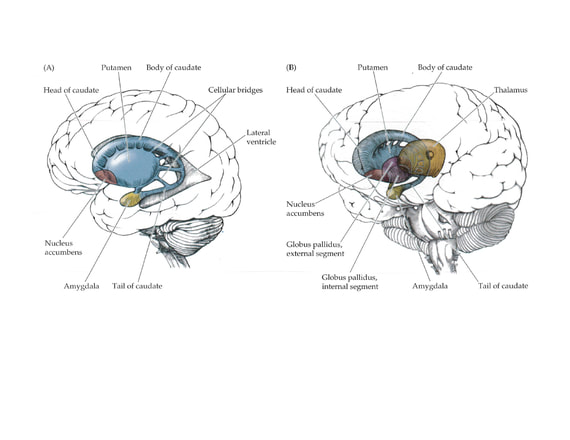

"You may have heard the basal ganglia being mentioned in mainstream media recently associated with movement disorders like Parkinson’s Disease, which burdened the late Muhammed Ali. And rightly so - the role of the basal ganglia is mostly understood as related to the formation, execution, and remembrance of a sequence of movements towards a goal like throwing a punch for Ali or walking for us." Read full story. BY NIKO KRIEGESKORTE I'm here with Professor Daniel Wolpert of the Engineering Department at Cambridge University. Daniel is going to give the Talairach Lecture this year at the OHBM meeting in Geneva. I’d like to hear a little bit about his research, about his lecture, and his view of our field.

Excerpt from OHBM Communications/Media Team article on HuffPost Healthy Living:

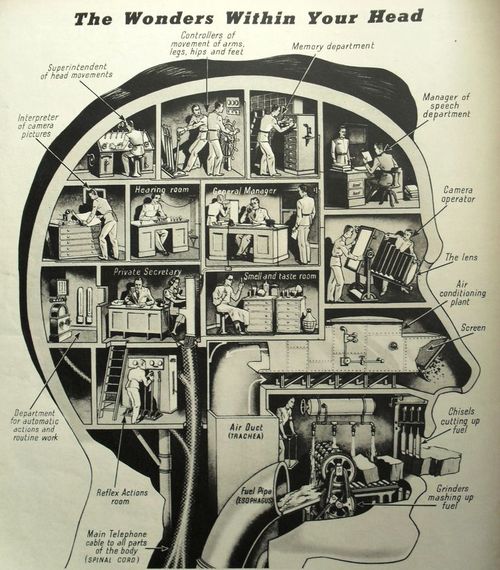

"The brain is commonly intuitively regarded as a collection of separate regions each with distinct functions of complex behaviors, experiences and phenomena. But, “Fear centers” and “planning centers” are not separate rooms in our brains in which neurons ring alarm bells and draft flow charts, respectively. " Read more |
