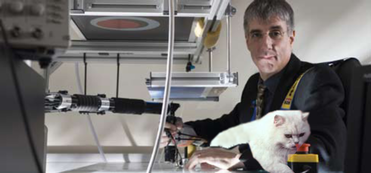

BY NIKO KRIEGESKORTE

I'm here with Professor Daniel Wolpert of the Engineering Department at Cambridge University. Daniel is going to give the Talairach Lecture this year at the OHBM meeting in Geneva. I’d like to hear a little bit about his research, about his lecture, and his view of our field.

My Director of Studies at Oxford said to me “Modeling the brain is all where it’s at.” And he showed me a paper by Zipser and Andersen, which had just come out in Nature. They were using a simple neural network to model visual cortex. I was completely transfixed! So I decided I’d had enough of medicine. It was too much like hard work. I wanted to go back and do basic science. After getting my PhD, I thought it was time to actually go and learn some mathematics. So I went to MIT. I had my interview with Michael Jordan who said “If you want to come and do a postdoc here you have to know what a Jacobian is. If you don't know what a Jacobian is, don't come here.” I said “That's not a problem.” I had no clue what a Jacobian was! I went straight back to the hotel room and read up all about them. And I did a postdoc there which was great fun. MIT was such a vibrant place.

NK: How did you end up at the engineering department in Cambridge?

DW: I was at the University College London for ten years where I was, I guess, the most computational person in a biological environment. And one day I got a phone call from Keith Peters, who was the head of the [Cambridge University] medical school, saying “Are you interested in a chair in Engineering at Cambridge?” And I said, “I think you've got the wrong person. I'm not an engineer. I'm a neuroscientist!” He said, “We're not idiots in Cambridge. We know what you do! We want to create a bio-engineering programme and what we really want to hire is not an engineer with an interest in biology, but a biologist with an interest in engineering.” This was very attractive to me, because going from being the most mathematical in a biology department to being the least mathematical in an engineering department would be very good for my game. So I moved to Cambridge and we set up this group which does computation and biological learning. The idea is that we do both machine learning and neuroscience. The interplay between the machine learning and the neuroscience, I think, is absolutely vital. I think the idea that you can understand the brain without very strong computational support is ridiculous.

NK: What’s your lecture at OHBM in Geneva going to be about?

DW: The first thing I want to convince people of is that there are interesting questions about motor control. I want to convince people that actually the only point in understanding cognition and sensation and perception is to guide action. Then I want to go through the different levels we’ve been working on to try and really explain the interesting new developments, both at the low level – sensory-motor noise, our work on normative models of Bayesian processing – and at the learning level: how people learn structures of tasks. Finally, I want to cover our more recent work, trying to link decision making and motor control together: How motor control affects decisions and how decisions affect motor control. I’ll try to give everyone a bit of an understanding of the algorithms we think the brain uses. Now I have to admit that we don’t do imaging in my group. But I’d like to inspire imagers to pick up our ideas, ideally, and go and test them using their techniques!

But the problem is they’re intractable. So although you can say you should do the Bayesian thing, actually doing the Bayesian thing is intractable for computers and probably intractable for the brain. So I think all the smart money at the moment is asking what the clever approximations are that the brain can use to solve these sorts of problems. And so the group which I'm head of is half machine learning and half neuroscience. The machine learners’ goal is not just saying it’s Bayesian, but asking how can we do these complex computations in efficient ways. And I think they've been very successful. We used to think that the way you did control was to have some cost and some desired trajectory. You have to play out the desired trajectory, and if you get perturbed, you update your plan. That's a very inefficient way to do control. All you basically need is an optimal feedback controller.

NK: Looking at your work, it seems to me we can learn a lot about the computations from behavioural data. Do we need to measure brain activity at all?

DW: Oh, we certainly do! I very much believe in Marr’s levels. I tend to focus on the computation and the algorithm. You know, what problem does the brain have to solve? What algorithms does it use? But clearly in the end we want to be able to understand where the algorithms are instantiated and, more than that, I think brain imaging can help differentiate between algorithms. I think the model-based approach, which has become very popular, is incredibly impressive! I guess if that had been around 20 years ago when I first started out, maybe I'd have got involved in imaging. That seems a very beautiful way to go.

NK: In humans we can get tens of thousands of channels of haemodynamic activity. In animals, at the circuit level, we can measure individual neurons, and much larger numbers of them than before. Do you prefer humans or animals?

DW: I work on the control of movement. I think animals have everything we need to understand most of the control of movement. I think people who work on language are a bit stuck in terms of the model systems they can work on. For me the neurophysiology level is an area I follow probably more than imaging, because most animals can move, most of them can move better than we can move, and so I think some of the circuit work from say places like Janelia Farm is really exciting. My field relies on very simple things like robotics and virtual reality, which have improved. But if you look at the improvements in imaging and in particular neural circuit manipulation, those are just extraordinary molecular techniques.

But it's still incredibly useful even for motor behaviour because there's no reason to believe that a rat does motor control the same way a human does. We have very different bodies; a very different understanding of the world. So I think it's incredibly useful.

NK: Toward an overall understanding of brain function, do we go from the bottom up or from the top down?

DW: We go both ways and they meet in the middle! It's hard to start from the middle. I think from the top down, we can get the algorithms. It's very hard for people who work on circuits to start with high-level questions. They're just trying to understand how the circuit works. But for some circuits, we've made huge advances in understanding the algorithms. So, I am very impressed with people who work, for example, on the cerebellar structure in electric fish, where we know it does prediction of sensory consequences, but it wasn't really known exactly how it did it. But recent work from Columbia and Nate Sawtell has beautifully shown how that circuit works. That work is just spectacular and probably wasn't achievable ten years ago.

NK: AI is finally beginning to work – using models inspired by the brain. Is AI relevant to neuroscience?

DW: AI has been very successful in a limited number of tasks, tasks which are very clear like the game of Go. That's a very simple state of this board and a very simple objective to win. When it comes to more general tasks, like having an autonomous agent acting in the real world, it's much harder to write down the cost function or what the algorithm should be to achieve success in the world. In robotics, closer to my area, there have been some successes. [Boston Dynamics’] BigDog robot can walk over terrain. But then there are the really hard tasks, like manipulation, that are still unsolved. Robot control is generally solved one task at a time. So a robot is hand-tuned to solve one task. And if you want to go to another task, you go back to square one and start all over again. One of the big challenges for the future is how you make general-purpose algorithms, which can learn multiple tasks and interact in multiple environments. That's still a very hard problem. At the moment, there's no robot with the dexterity of a five-year-old child in terms of manipulation: very good for things like driving and navigation, but when it comes to tactile things with the arms and hands, they're really in their infancy.

NK: Ultimately, we need computational models that actually perform the function.

DW: In the end that's the proof in the pudding. But unfortunately sometimes you can reproduce the function without understanding it any better. So one of the frustrations with deep nets is that they may work, but you don't really know why they work. But it's still great that they work. I think there's a tension. There's those of us that want beauty in the algorithms, as well as them working, and those who just want the algorithms to work. And so, those who believe in normative and Bayesian models are a bit frustrated, I would guess, by the deep nets, because they work so well; when you want the normative, optimal solution to work better.

NK: Finally, I'd like to hear about your interests and obsessions beyond science.

DW: I guess my real obsession is science. I tend to work on science most of the time and I just love working at weekends and evenings on science. But my other obsessions; I have two daughters who are both in the sciences. Next month, all being well, my eldest daughter will become a doctor! My youngest is a chemist, and so they're both at university. We spend a lot of time with them. I guess one of my obsessions is travel. As a family, we love traveling. So we spend a lot of time together, me going to fun meetings in China, India, and South Africa, and they often join me and that's an absolute joy.

Thanks to Simon Strangeways for video recording and to Jenna Parker for transcribing the interview.
